A brief history of the Service Standard

I've had the privilege and responsibility of assessing the design of UK government services since 2017. In a series of posts (no promises right now about when and how!), I'm going to dig out some of the things I've learned.

This one is about history. I've previously written about the value of understanding history, and I think that this applies to the Service Standard too.

What is the Service Standard?

For those that aren't working in UK government, the Service Standard is an assurance process for building a service with some form of technology element. It's aimed at services going on GOV.UK, but local government uses it too.

The Service Standard has 14 points in 3 sections (meeting users' needs, providing a good service, using the right technology).

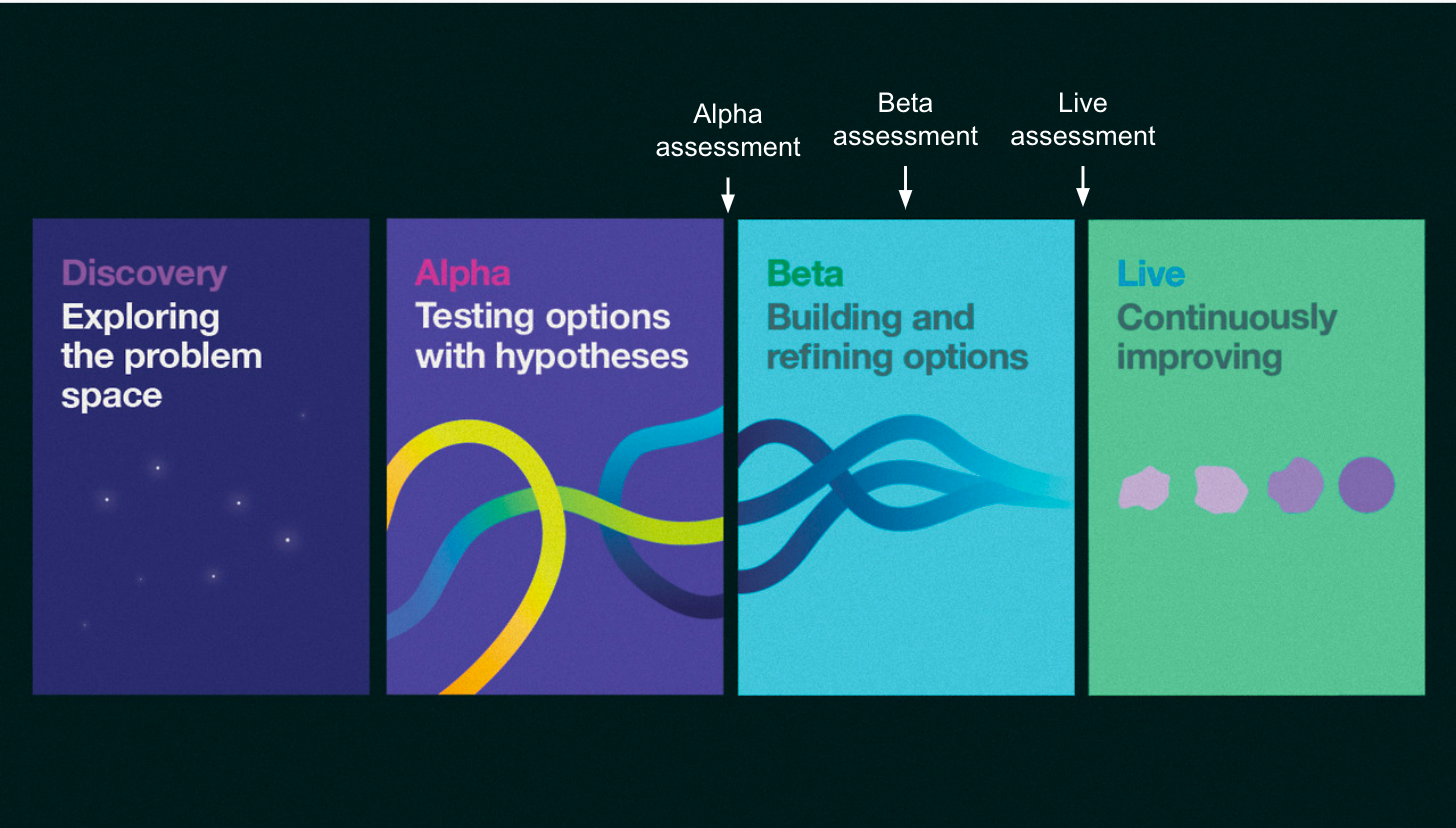

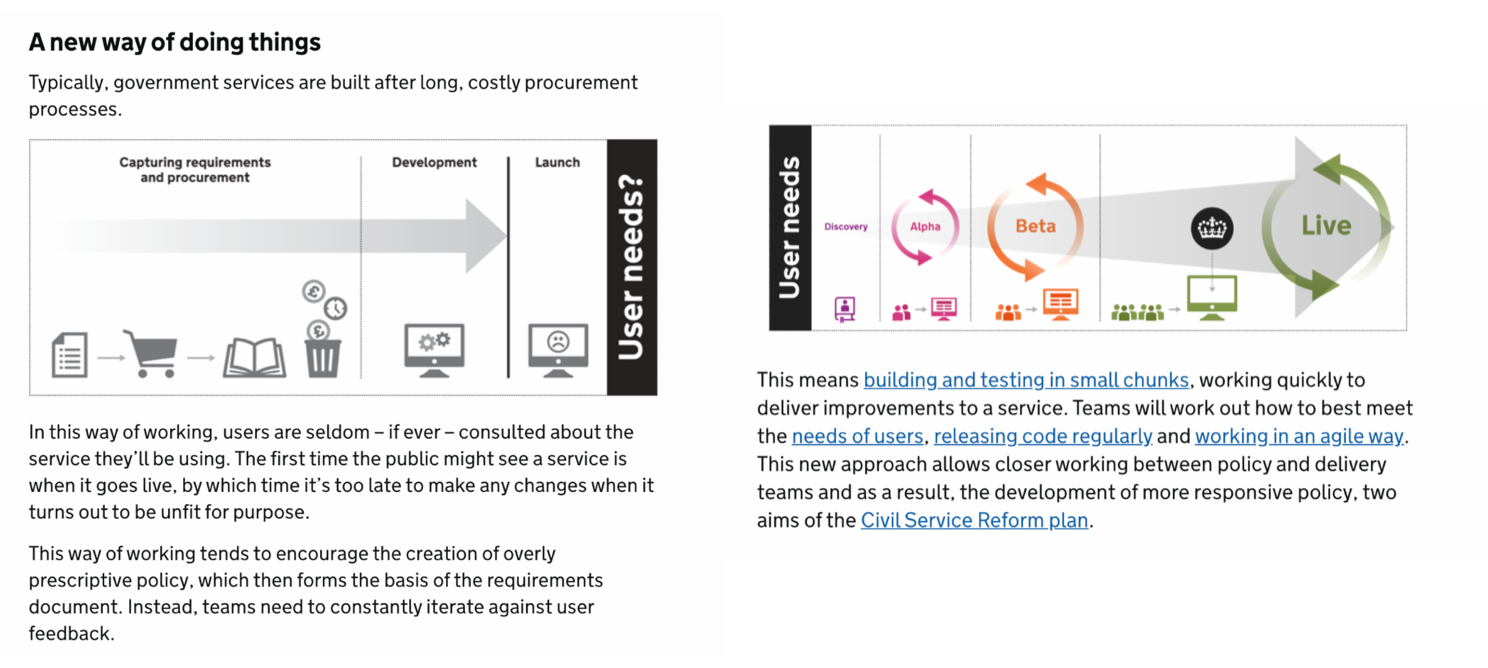

There are stage gates called 'service assessments' which control spend:

- alpha - permission to get a full development team and start building and testing with invited users

- beta - permission to release to all and have a page on GOV.UK

- live - show that the service has met its intent and no longer needs a full-time team on it

Each service assessment has a panel of specialists. (Initially they were only from GDS, but in later years they have come from across UK government.) They specialise in product management or agile delivery, user research, technology, design (me!) and, for later assessments, performance analysis.

The Service Standard is also supported by the:

- Service Manual (around 150 pages)

- Design System (around 80 pages)

- GOV.UK A-Z style guide

- 10 government design principles

- API technical and data standards

- Open Standards

- Technology Code of Practice (used if teams buy off-the-shelf digital services rather than build)

- Service Standard reports (pre-2016 versions hosted on the Data in Government GOV.UK blog)

This is the ~~third~~ fourth version of the standard

Update: after I wrote this article, ex-GDSer Matt published a post about the history of the Service Standard that mentioned an initial "26 points but only 4 count" version. I'm keeping the versioning but have added an initial version 0, in line with his numbering.

Sometimes I see job ads that say "the GDS Digital Service Standard" or even "GDS Digital by Default Service Standard". They're not wrong, they're just out of date.

What follows is a brief and incomplete summary of the history of the Service Standard, based on GDS's "A GDS Story" and the UCL case study of GDS (all mistakes mine alone).

Update: Version 0: 26 points but only 4 'must meet'

I didn't know about this version until Matt's blog post. I won't say much here other than that it was a small pilot; if you're interested in more, go and read Matt's post! I do think the notion that some points weren't critical lingered in government narrative for a while, though…

Version 1: Digital by Default Service Standard

The Digital By Default Service Standard (view on archive.org) was properly launched by the Government Digital Service (GDS) in 2014 (after a beta release in 2013). It translated the department's 10 Government Design Principles into 25 more specific points for service teams. It also had a particular intent to encourage departments to build secure services using agile techniques and with regular user feedback.

This version of the standard was supported by about 80 pages in the Service Manual, plus prompts and guidance. It was also accompanied by the 25 exemplar digital transformation services, where GDS worked closely with departments.

Version 2: Digital Service Standard

This version was released in 2015 (right around the time I started in government). (View it as it was in 2015 on archive.org or the current archive version on GOV.UK.)

It consolidated the 25 points into 18 and put less emphasis on digital take-up (note also "by Default" disappearing from the standard's name). It also removed the prompts for the standard (much to the chagrin of teams).

During this version, the following also launched:

- assessing internal (colleague-facing) services against the standard; the initial Digital by Default Service Standard covered only public-facing services

- job roles for the new Digital, Data and Technology (DDaT) profession

- the GDS Academy for training (moving from DWP)

- in 2017, the GOV.UK Design System (replacing a GOV.UK Elements Heroku app and a hackpad of crowdsourced patterns, both of which I used a lot)

Version 3: The Service Standard

The Service Standard was released in 2019. This again reduced the points, from 18 to 14 (notably removing the "test with the minister" point). It put more emphasis on end-to-end service design, partly by removing "Digital" from the name of the standard and partly by adding a new point, "solve a whole problem for users".

During this version, the following also launched:

- new DDaT job roles (for example front-end developer)

- the Data Standards Authority and standards

- API technical and data standards

- DDaT Functional Standards

- in 2021, the Central Digital and Data Office (CDDO), which took some parts of GDS with it

By this point the Service Manual had grown to around 150 pages.

Version 3a: The Service Standard (CDDO-owned)

When the Central Digital and Data Office (CDDO) was launched in 2021, everything directly related to the Service Standard (assessments, the Service Manual and guidance) moved from GDS to CDDO. So today the Service Standard should correctly be called the "CDDO Service Standard" rather than the GDS one.

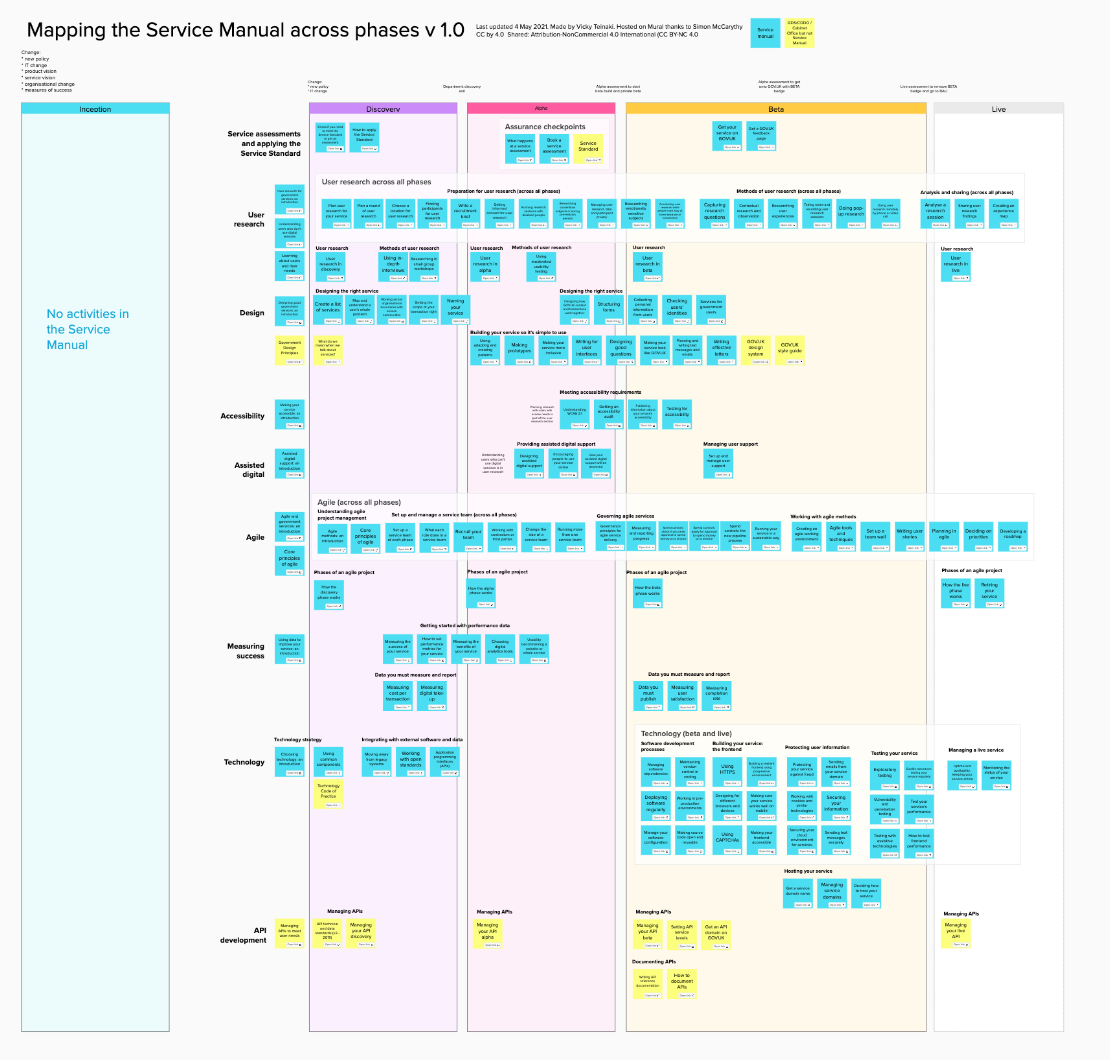

For those that are interested in seeing this in detail, I've mapped them:

What I think this means

Why do I care about this history?

9 years is a long time in internet years, but not in civil service years

I remember an infographic from one department that said that the average colleague there had worked in the department for 14 years. That's longer than the entire history of the Service Standard!

This means that people have long memories and a lot of connections, and so may fall back on their experience of whichever version of the Service Standard they last knew. This is important because…

The old exemplars may not be fit for purpose for the latest standard

Government standards have become increasingly ambitious. A service that met the Digital by Default Service Standard in 2014 might not meet the 2019 Service Standard.

There have been some great examples—I've assessed them.

However, they're not well documented, which makes it difficult for teams to know what good looks like.

This is especially important because teams are often set up to solve IT problems rather than whole problems for users.

For example, I was recently talking to a design service assessor who had been on the panel for a live assessment against the old Digital Service Standard. The service had only just qualified to be assessed against that standard, having started discovery before June 2019. The assessor remarked that this had worked in its favour: it might not have met the "solve a whole problem for users" point in the latest standard, even though it was otherwise a good digital service that helped users.

We need to help people know what good looks like for this Service Standard

I think more guardrails would help teams do good work.

I'm encouraged that there is work happening to do this, such as:

- the 'Introduction to the Service Standard' Civil Service Learning course created by DWP

- work that I'm told the Service Manual team are doing to make their guidance easier to navigate (there's a lot of great stuff in there, but it's not always easy to find!)

However, if there's one thing I think we should take from this, it's that a lot has happened in just 9 years, and that part of helping people do good work is helping them understand when the rules have changed. I hope that more of that happens in the future.
