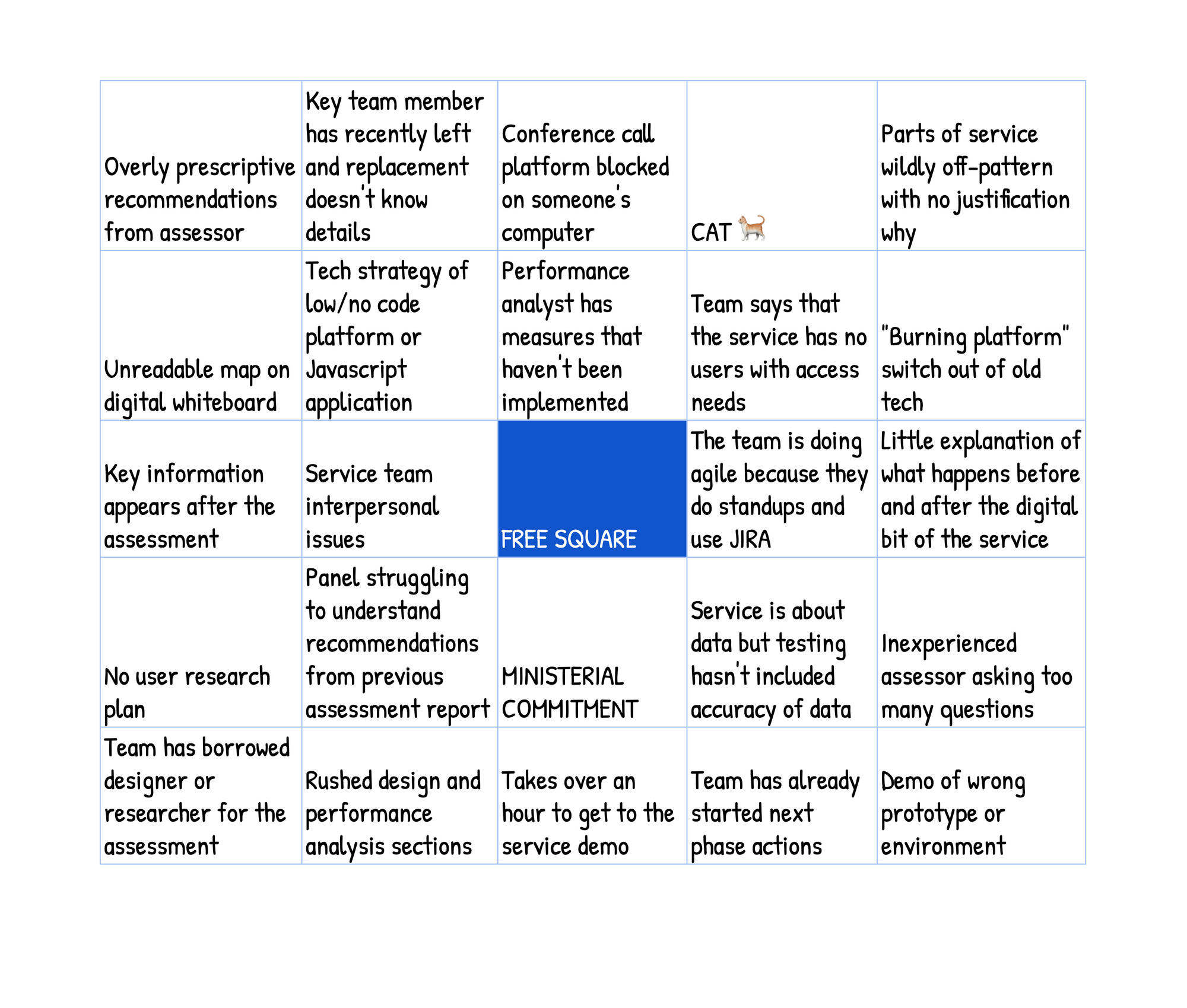

Service assessment bingo - 2023 edition!

It's been nearly 4 years since my first version of service assessment bingo, a playful take on service assessment anti-patterns from both service teams and assessors. Ishmael from DWP pointed out that a lot has changed since then. We've switched from in-person assessments (usually slowly cooking in a cramped, over-peopled meeting room in London) to virtual ones, and we've also moved from the Digital Service Standard to the Service Standard.

So, for Services Week, I decided to make a 2023 version.

There's also a spreadsheet version available.

This is based on my own observations from being a panel member on maybe 30 service assessments since 2019. I also put out a call for suggestions on Twitter and incorporated some of them into the changes.

What I changed

- Unsurprisingly, a lot more virtual stuff. Rather than tiny printed pictures and meetings running over, we now have tiny unreadable digital whiteboards, someone's work computer blocking the conference call platform, and the ever-mischievous cat. Whole sessions are less likely to run over now because people have other meetings, but individual sections still can: instead of just user research running over, design and performance analysis now get squeezed. A new addition is key information appearing by email after the assessment.

- Other demo quirks - Anne suggested people showing the wrong demo or assessment deck, which I've definitely seen more since we moved to remote sessions. Simon also mentioned getting an hour (actually 2 hours) into the assessment without having seen the demo, which is usually caused by a combination of the team over-explaining and the assessors asking too many questions.

- Accessibility - we've gone from WCAG 2.0 to people saying that they don't have any users with access needs.

- Service intent - two different reasons for change that can be concerning. The first is 'burning platforms' (where something is not only legacy tech but has to be moved off immediately). The second is the MINISTERIAL COMMITMENT (a suggestion from David Potts), which is less about the commitment itself and more about how well the team is able to align it with user needs.

- Tech and delivery - rather than panels not understanding the tech part, we now see a lot more use of low-code or no-code platforms. These cause some headaches for assessment panels (is it the Service Standard? The Technology Code of Practice?), particularly when they mean the team can't easily use GOV.UK Frontend. Another addition is the team having already started next-phase work on the assumption that they'll get a 'met' (this one has always happened and is only now being added).

- Ways of working - 'performance analyst not being invited' has been changed to 'data being important but not tested' (a riff on a suggestion from Pete about performance analysts being thrown under the bus by the team). Progress? Maybe? I've also added situations where the team has borrowed a designer or researcher just for the assessment.

- Assessor anti-patterns - we've gone from assessors being nice in person but damning in the report to overly prescriptive recommendations. I've also changed 'no one reading the briefing report' to 'the panel struggling to understand previous recommendations'. (Both of these anti-patterns are things I cover in my blog post about being a responsible service assessor.) 'Panel member talking too much' is now more specifically about an inexperienced assessor asking too many questions.

What hasn't changed

People told me I should keep a few things:

- Key team member has recently left and replacement doesn't know details (sorry Stephen!)

- Parts of service wildly off-pattern with no justification why (validated by Anne)

- The team is doing agile because they do standups and use JIRA

- Little explanation of what happens before and after the digital bit of the service

- No user research plan

Would have included if I had more lines

A few nice extras that I couldn't quite fit in:

Panel member posts message for panel eyes only in the assessment meeting chat.

— Anne Ramsden (@Anne_Tweetings) March 17, 2023

Nice philosophical talk. No actual evidence has been presented.

— Tom Dolan (@tomskerous) March 17, 2023

All the acronyms and panel struggling to remember them. The pain is real. Plus, of course, the legacy hand

— Debbie Blanchard (@DebBlanch44) March 18, 2023

Thanks all for your suggestions!
